WosoM's End-to-End AI Data Collection Pipeline

In artificial intelligence, data is fuel. But not all fuel is equal — poor data can cause bias, errors, or even system failure. At WosoM, we treat data as a strategic asset. Our AI data pipeline isn't just about gathering raw information; it's about crafting rich, reliable, and usable training material tailored to your domain.

1. Finding the Right Clients

We start with deep industry engagement. Whether you're a legal tech company that needs case annotations or a healthcare startup building a diagnosis assistant, we first work to understand your data gaps. This client-first approach lets us tailor sourcing, annotation, and compliance standards to each vertical.

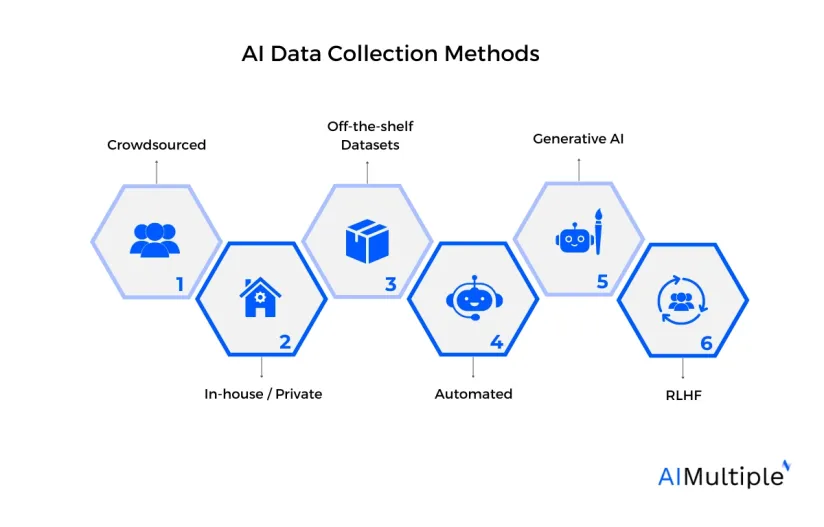

2. Identifying Data Sources

We draw on internal CRMs and support transcripts, scraped web content, public datasets, and crowd-sourced inputs, and we keep our sourcing both ethical and diverse. Every data origin is vetted for bias, drift, and coverage. Typical inputs include:

- Scanned documents (PDFs, legal contracts)

- Transcribed calls and voice notes

- Structured CSVs and legacy database dumps

- Web data (news, social media, forums)
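Coverage is the easiest of these checks to automate. The sketch below is an illustration under stated assumptions, not WosoM's actual vetting code: it assumes each record carries hypothetical `source` and `category` fields, flags any source that supplies more than a chosen share of the data (0.4 here, an arbitrary example value), and lists expected categories that have no examples at all. Bias and drift need their own, separate checks.

```python
from collections import Counter

def coverage_report(records, expected_categories, max_source_share=0.4):
    """Flag sources that dominate the dataset and expected categories with no examples."""
    total = len(records)
    if total == 0:
        return {"dominant_sources": {}, "missing_categories": sorted(expected_categories)}
    source_counts = Counter(r["source"] for r in records)
    category_counts = Counter(r["category"] for r in records)
    # A source is "dominant" if its share of all records exceeds the (assumed) threshold.
    dominant = {
        src: n / total for src, n in source_counts.items() if n / total > max_source_share
    }
    missing = sorted(set(expected_categories) - set(category_counts))
    return {"dominant_sources": dominant, "missing_categories": missing}

# Hypothetical records: each notes where it came from and what it is about.
records = [
    {"source": "crm", "category": "billing"},
    {"source": "crm", "category": "billing"},
    {"source": "support_calls", "category": "outage"},
    {"source": "web_forums", "category": "billing"},
]
report = coverage_report(records, ["billing", "outage", "refund"])
print(report)  # crm supplies half the data, and there are no "refund" examples
```

In practice the threshold and the list of expected categories would come from the client's domain requirements rather than fixed constants.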

3. Pre-Cleaning Analysis

Before a single byte is ingested, we analyze data complexity, expected schemas, and language diversity. This step lets us automate schema extraction and encoding normalization and confirm that the text is ready for NLP processing.
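For tabular sources, schema extraction can start from a small text sample. This minimal sketch uses Python's standard-library `csv.Sniffer` to guess the delimiter and whether the first row is a header, then assigns each column a rough type. The sample data, the `infer_columns` and `guess_type` helpers, and the int/date/str typing rules are illustrative assumptions; `Sniffer` is heuristic, so its output is a first guess for a human to confirm.

```python
import csv
import io
from datetime import date

def guess_type(values):
    """Very rough column typing: int if all digits, date if all ISO dates, else str."""
    if not values:
        return "str"
    if all(v.isdigit() for v in values):
        return "int"
    try:
        for v in values:
            date.fromisoformat(v)
        return "date"
    except ValueError:
        return "str"

def infer_columns(sample):
    """Guess delimiter, header presence, and column types from a text sample of a CSV file."""
    sniffer = csv.Sniffer()
    dialect = sniffer.sniff(sample, delimiters=",;\t|")  # restrict to common delimiters
    has_header = sniffer.has_header(sample)
    rows = list(csv.reader(io.StringIO(sample), dialect))
    if has_header:
        header, body = rows[0], rows[1:]
    else:
        header, body = [f"col_{i}" for i in range(len(rows[0]))], rows
    columns = {
        name: guess_type([r[i] for r in body if i < len(r)]) for i, name in enumerate(header)
    }
    return {"delimiter": dialect.delimiter, "has_header": has_header, "columns": columns}

# Hypothetical sample, e.g. the first few KB of a legacy database dump.
sample = "id;name;signed_on\n1;Acme Corp;2021-03-04\n2;Globex;2022-11-30\n"
schema = infer_columns(sample)
print(schema)
```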

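```python
import chardet

# chardet inspects the raw bytes of text; run it on text extracted from a PDF, not on the PDF itself
with open('contract.txt', 'rb') as f:
    raw = f.read()

guess = chardet.detect(raw)  # dict with 'encoding', 'confidence', and 'language' keys
print(guess)
text = raw.decode(guess['encoding'] or 'utf-8', errors='replace')  # detection only guesses; this decode normalizes to Unicode
```

4. Collection Engine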

We design modular, scalable collectors: crawlers, OCR pipelines, form parsers, or audio-to-text converters. Each collector logs errors, tracks versions, and exports raw data for cleaning.
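One way to keep collectors modular is to share a single wrapper for logging, versioning, and export, so each concrete collector supplies only its fetch step. The sketch below assumes a hypothetical `Collector` base class that writes raw records as JSON Lines; `UppercaseCollector` is a toy stand-in for a real crawler, OCR pipeline, form parser, or audio-to-text converter.

```python
import json
import logging
import os
import tempfile
from dataclasses import dataclass, field

@dataclass
class Collector:
    """Hypothetical base class: subclasses implement fetch(); run() handles logging, versioning, export."""
    name: str
    version: str
    errors: list = field(default_factory=list)

    def fetch(self, item):
        raise NotImplementedError  # a crawler, OCR pipeline, or form parser would override this

    def run(self, items, out_path):
        """Fetch every item, log failures without stopping, and write successes as JSON Lines."""
        log = logging.getLogger(f"collector.{self.name}")
        written = 0
        with open(out_path, "w", encoding="utf-8") as out:
            for item in items:
                try:
                    payload = self.fetch(item)
                except Exception as exc:  # one bad input should not abort the whole batch
                    log.warning("%s v%s failed on %r: %s", self.name, self.version, item, exc)
                    self.errors.append({"item": item, "error": str(exc)})
                    continue
                # Every exported record is stamped with the collector name and version.
                record = {"collector": self.name, "version": self.version, "source": item, "raw": payload}
                out.write(json.dumps(record, ensure_ascii=False) + "\n")
                written += 1
        return written

class UppercaseCollector(Collector):
    """Toy stand-in for a real collector."""
    def fetch(self, item):
        if not item:
            raise ValueError("empty input")
        return item.upper()

out_file = os.path.join(tempfile.mkdtemp(), "raw_toy.jsonl")
collector = UppercaseCollector(name="toy", version="0.1.0")
count = collector.run(["alpha", "", "beta"], out_file)
print(count, collector.errors)
```

Stamping the version on every record means a later cleaning or QA issue can be traced back to the exact collector build that produced it.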

“A bad scraper generates megabytes of noise. A great one gives you gold nuggets of relevance.”

5. Cleaning & Normalization

We remove duplicates, fix encoding, split malformed sentences, unify tags, and convert inconsistent formats. This is where raw text becomes learnable AI material.
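Several of these cleaning steps fit in a few standard-library functions. This sketch assumes NFKC Unicode normalization, exact duplicate removal by hashing the normalized, case-folded text, and a hypothetical list of accepted date formats (day-first `dd/mm/yyyy` is an assumption; US-style dates would need a different rule). It only catches exact duplicates after normalization; near-duplicates need a fuzzier method.

```python
import hashlib
import re
import unicodedata
from datetime import datetime

def normalize_text(text):
    """Unicode-normalize (NFKC) and collapse runs of whitespace into single spaces."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()

def normalize_date(value, formats=("%d/%m/%Y", "%Y-%m-%d", "%B %d, %Y")):
    """Convert a date in any of the assumed formats to ISO 8601; leave unknown formats unchanged."""
    for fmt in formats:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return value

def deduplicate(texts):
    """Drop exact duplicates after normalization, keeping the first-seen order."""
    seen, unique = set(), []
    for t in texts:
        norm = normalize_text(t)
        key = hashlib.sha256(norm.casefold().encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(norm)
    return unique

docs = ["Patient reports  chest tightness.", "patient reports chest tightness.", "No\u00a0known allergies."]
unique_docs = deduplicate(docs)
print(unique_docs, normalize_date("04/03/2021"))
```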

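```python
import re
from bs4 import BeautifulSoup

raw_html = "<p>Signed 5 Jan 2024; renewed in January.</p>"  # placeholder input
clean_html = BeautifulSoup(raw_html, 'html.parser').get_text()  # strip tags, keep visible text
standardized = re.sub(r'\bJan\b', 'January', clean_html)  # whole-word match; a bare replace() would turn "January" into "Januaryuary"
```

6. Validation & Annotation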

Our QA teams validate every data slice. Depending on the data type, annotation uses bounding boxes, named-entity rules, or custom taxonomies, and it is layered: the first pass is crowd-verified, and the second is expert-reviewed.
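The two-pass flow can be modeled as a majority vote over crowd labels, with low-agreement items escalated to expert reviewers. The 2/3 agreement threshold and the `first_pass_consensus` helper are assumptions for illustration; the criteria WosoM uses to decide what reaches the expert pass are not specified here.

```python
from collections import Counter

def first_pass_consensus(labels_by_item, min_agreement=2 / 3):
    """Majority vote over crowd labels; items below the agreement threshold go to expert review."""
    accepted, escalate = {}, []
    for item_id, labels in labels_by_item.items():
        if not labels:  # nothing to vote on, so send straight to an expert
            escalate.append(item_id)
            continue
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            accepted[item_id] = label
        else:
            escalate.append(item_id)
    return accepted, escalate

crowd = {
    "doc-1": ["symptom", "symptom", "symptom"],
    "doc-2": ["symptom", "diagnosis", "medication"],
    "doc-3": ["medication", "medication", "diagnosis"],
}
accepted, escalate = first_pass_consensus(crowd)
print(accepted, escalate)
```

Even accepted items can be sampled for expert spot-checks; the threshold only decides which items are guaranteed a second look.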

7. AI Testing & Benchmarking

We don’t wait until deployment to find out whether the data works. We run small experiments on your target model or LLM framework and flag low-impact features or data drift before delivery.
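One common way to quantify drift in a categorical field is the Population Stability Index (PSI), which compares each category's share in a reference batch with its share in a new batch: PSI = Σ (cur% − ref%) · ln(cur% / ref%). This sketch is an illustration, not WosoM's documented test; the language-tag example and the 0.25 alert threshold (a widely used rule of thumb) are assumptions.

```python
import math
from collections import Counter

def population_stability_index(reference, current, eps=1e-6):
    """PSI over categorical values: sum of (cur% - ref%) * ln(cur% / ref%) across categories."""
    categories = set(reference) | set(current)
    ref_counts, cur_counts = Counter(reference), Counter(current)
    psi = 0.0
    for c in categories:
        ref_p = max(ref_counts[c] / len(reference), eps)  # eps avoids log(0) for unseen categories
        cur_p = max(cur_counts[c] / len(current), eps)
        psi += (cur_p - ref_p) * math.log(cur_p / ref_p)
    return psi

reference = ["en"] * 80 + ["es"] * 20  # e.g. language tags in the training batch
current = ["en"] * 50 + ["es"] * 50    # language tags in a newly collected batch
psi = population_stability_index(reference, current)
drifted = psi > 0.25  # assumed alert threshold
print(round(psi, 3), drifted)
```

Identical distributions give a PSI of 0, and the score grows as category shares diverge, so it can be tracked per field across delivery batches.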

8. Post-Processing & Scripts

After annotation, we embed metadata, chunk text, tokenize audio, and package and compress the output in the format each downstream tool needs (e.g., Hugging Face-compatible JSON, COCO, or CSV).
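Chunking and export can be sketched as a word-window splitter with overlap plus a JSON Lines writer that uses the same fields as the example record in this section (`text`, `label`, `language`, `split`). The window size, the overlap, and the choice to copy a document-level label onto every chunk are assumptions; span-level labels would need their offsets remapped per chunk.

```python
import json

def chunk_words(text, max_words=50, overlap=10):
    """Split text into windows of at most max_words words, each sharing `overlap` words with the previous one."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):  # this window already reached the end
            break
    return chunks

def to_jsonl(chunks, labels, language="en", split="train"):
    """Emit one JSON object per line, copying the document-level labels onto every chunk."""
    return "\n".join(
        json.dumps({"text": c, "label": labels, "language": language, "split": split}, ensure_ascii=False)
        for c in chunks
    )

doc = "Patient reports chest tightness after exercise and mild shortness of breath at rest."
chunks = chunk_words(doc, max_words=8, overlap=2)
print(to_jsonl(chunks, ["symptom"]))
```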

```json
{
  "text": "Patient is experiencing chest tightness.",
  "label": ["symptom"],
  "language": "en",
  "split": "train"
}
```

9. Final Delivery & Reporting

Data is delivered encrypted, together with a full report covering data lineage, validation metrics, example outputs, and any edge cases observed.
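Part of a lineage report can be a file manifest that lets the recipient verify every delivered file arrived intact. This sketch records each file's relative path, size, and SHA-256 digest; the manifest layout and the `build_manifest` helper are hypothetical, and encryption itself is left to whatever tooling the delivery uses.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """Hash the file in chunks so large deliverables don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()

def build_manifest(delivery_dir):
    """List every file under delivery_dir with its size and SHA-256 digest."""
    root = Path(delivery_dir)
    files = [
        {"path": p.relative_to(root).as_posix(), "bytes": p.stat().st_size, "sha256": sha256_of(p)}
        for p in sorted(root.rglob("*"))
        if p.is_file()
    ]
    return {"created_utc": datetime.now(timezone.utc).isoformat(), "files": files}

# Demo on a throwaway directory standing in for a real delivery folder.
demo_dir = Path(tempfile.mkdtemp())
(demo_dir / "train.jsonl").write_bytes(b'{"text": "sample"}\n')
(demo_dir / "reports").mkdir()
(demo_dir / "reports" / "validation.json").write_bytes(b"{}")
manifest = build_manifest(demo_dir)
print(json.dumps(manifest, indent=2))
```

The recipient recomputes each digest after decryption and compares it with the manifest; any mismatch points to a corrupted or altered file.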

image credit: AIMultiple

“Clean data is not just the input — it’s your brand’s output, ethics, and product experience.”